Your LLMOps Platform

Build reliable LLM applications faster with integrated prompt management, evaluation, and observability.

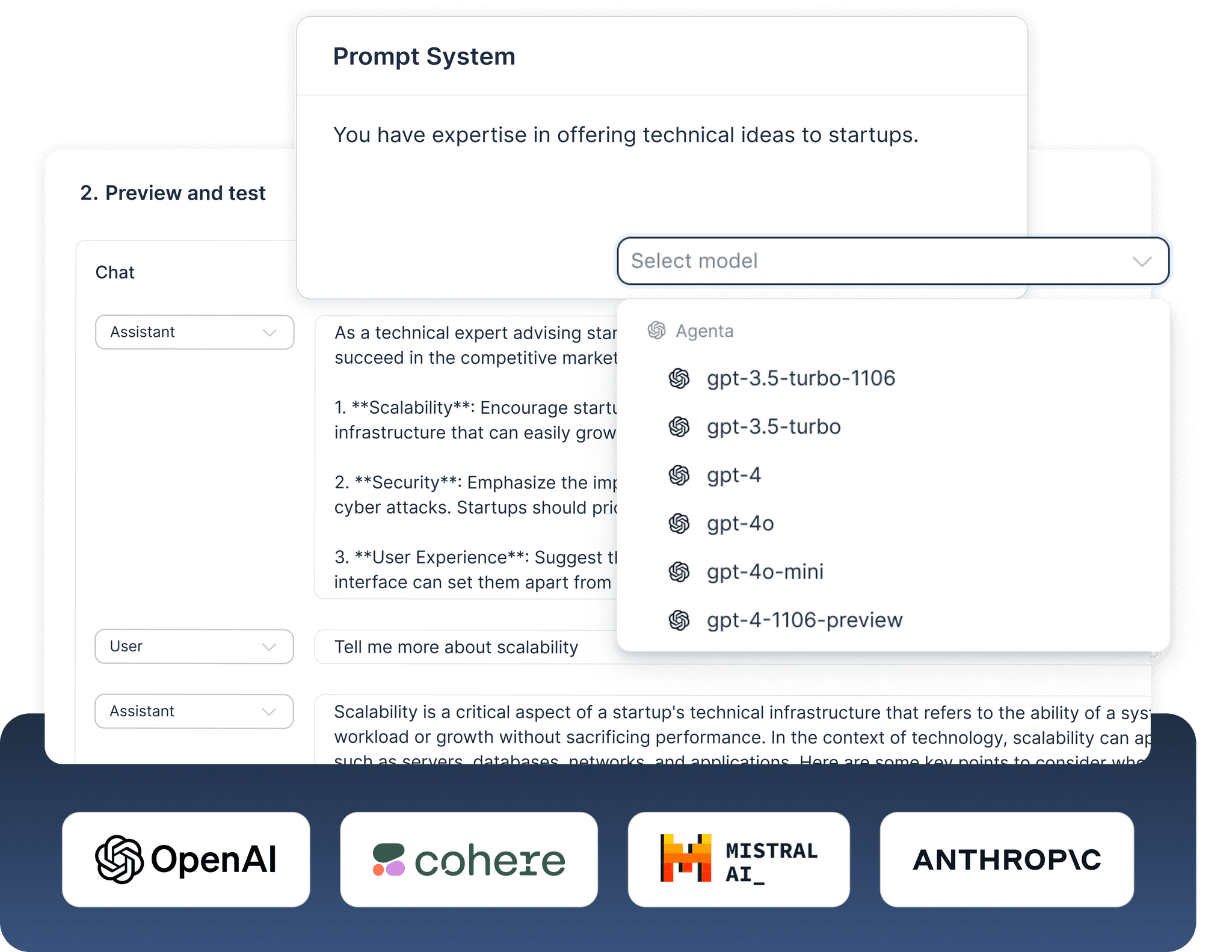

PLAYGROUND

Accelerate Prompt Engineering

Compare prompts and models across scenarios

Turn your code into a custom playground where you can tweak your app

Empower experts to engineer and deploy prompts via the web interface

PROMPT REGISTRY

Version and Collaborate on Prompts

EVALUATION

Evaluate and Analyze

Move from vibe checks to systematic evaluation

Run evaluations directly from the web UI

Gain insights into how changes affect output quality

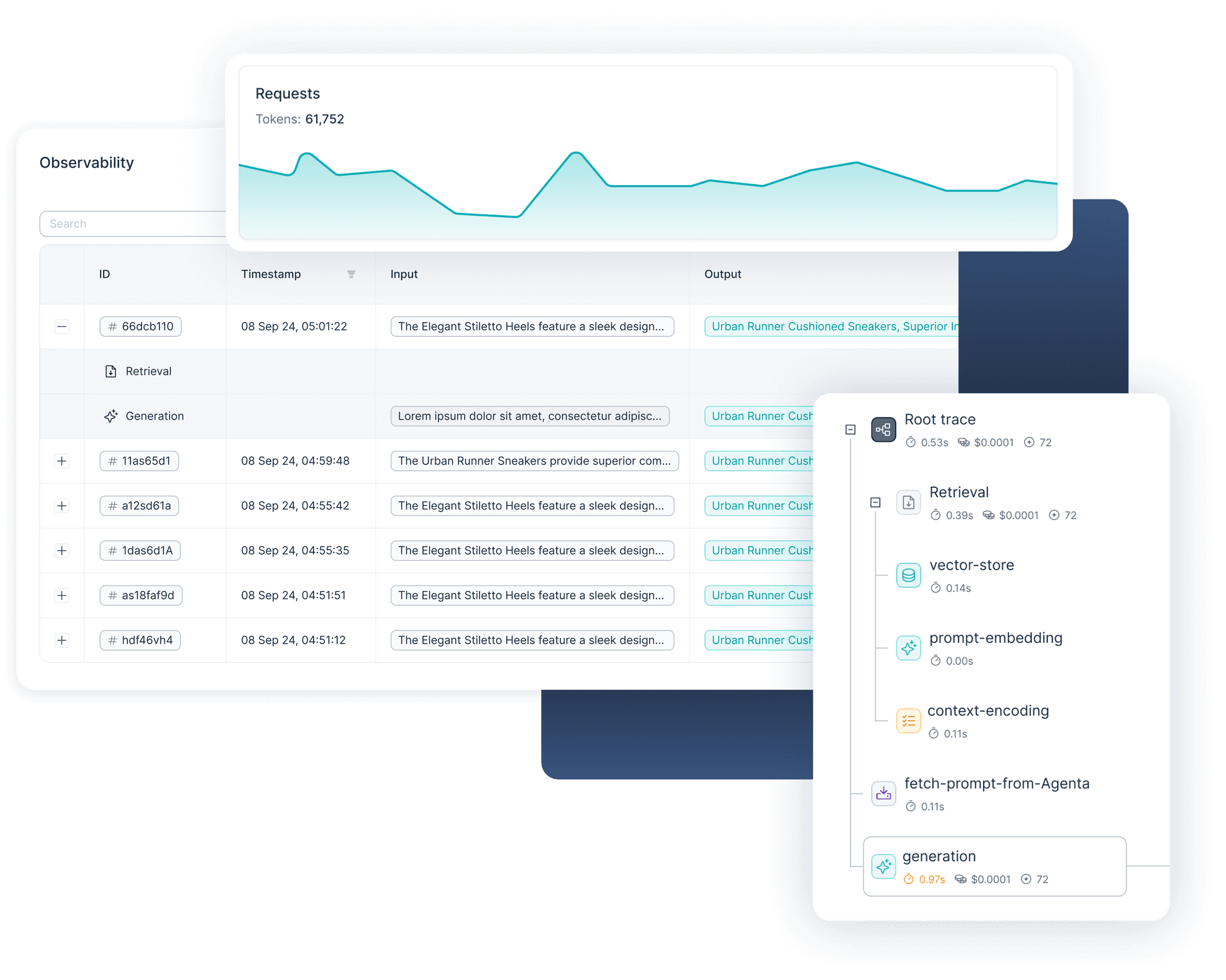

OBSERVABILITY

NEED HELP?

Create robust LLM apps in record time. Focus on your core business logic and leave the rest to us.

Have a question? Contact us on Slack.
